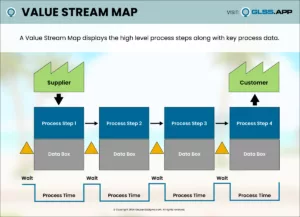

Lean Six Sigma solves problems by applying tools through a specific process: DMAIC (Define, Measure, Analyze, Improve and Control). This process is not simply a way of organizing the work; it connects the use of the tools so that they lead to successful results. I call this “connecting the dots,” and the sections below illustrate the critical dots and how to connect them.

Meet the Dots

The “dots” are specific deliverables—what you create as part of your process documentation. Dots are connected through a thought process that leads from one dot to the next—the output of one dot helps define the way the next dot works. While we can think of every DMAIC deliverable as a dot, I’ll be focusing on the major ones:

- Goal Statement

- Data Collection Plan

- Baseline Performance

- Root Cause Identification

- Root Cause Confirmation

- Solutions to Address Root Cause

- Verification of Improvement

- Monitoring & Response Plan

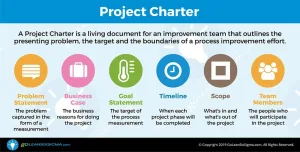

Goal Statement

This isn’t simply a declaration of what we are trying to achieve; it drives how we apply the tools. Each of the ensuing dots is driven by the Goal Statement, as well as by the dots that precede it. The Goal Statement clarifies the direction of improvement, along with “from” and “to” values and a target date.
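To make those parts concrete, here is a minimal sketch of a Goal Statement as a small data structure. The class name, field names, and the example values are all hypothetical, chosen only for illustration; the one rule it enforces is that the “to” value must lie in the stated direction of improvement.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class GoalStatement:
    # Hypothetical field names; adapt them to your own project charter.
    metric: str         # what we measure
    direction: str      # "decrease" or "increase"
    from_value: float   # current (baseline) level
    to_value: float     # target level
    unit: str
    target_date: date

    def __post_init__(self):
        if self.direction not in ("decrease", "increase"):
            raise ValueError("direction must be 'decrease' or 'increase'")
        # The "to" value has to lie in the stated direction of improvement.
        if self.direction == "decrease":
            improving = self.to_value < self.from_value
        else:
            improving = self.to_value > self.from_value
        if not improving:
            raise ValueError("'to' value does not match the direction")

    def sentence(self) -> str:
        return (f"{self.direction.capitalize()} {self.metric} "
                f"from {self.from_value:g} to {self.to_value:g} {self.unit} "
                f"by {self.target_date.isoformat()}.")


# Made-up example values.
goal = GoalStatement("invoice cycle time", "decrease", 12, 5, "days",
                     date(2030, 6, 30))
print(goal.sentence())
```

Writing the goal this way makes the metric explicit, which matters because every later dot measures that same metric.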

Try our Goal Statement Builder to help you streamline this first and crucial step.

Data Collection Plan

We collect data for two purposes:

- To establish (or confirm) baseline performance

- To find clues to possible causes

The Data Collection Plan should always measure performance in terms of the Goal Statement metric. Consider what conditions might make performance vary: shift, location, day of the week or transaction type. We call these stratification factors and record them as we gather our data. Later we analyze the data to determine which of them make a difference, and those differences become clues to root causes. We can collect other information of interest, but process performance over a time period is essential to building the baseline.

Baseline Performance

We plot the process performance in a Run Chart to show how it behaves over time. We normally expect to see a random pattern. Any non-random features, such as trends, cycling, clustering, shifts or extreme high and low points, suggest that some cause is acting upon the process. Digging deeper into these patterns can often surface clues to root causes.
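Two of these non-random features, shifts and trends, can be screened for with simple counts. The sketch below is an illustration under assumptions: the function names and sample data are made up, and the threshold that turns a count into a "signal" varies between run chart references, so the code only reports the counts and leaves that threshold to you.

```python
from statistics import median


def longest_run_about_median(values):
    """Longest streak of consecutive points on one side of the median.

    Points exactly on the median are skipped, a common run chart convention.
    """
    center = median(values)
    longest = current = 0
    previous_side = 0
    for v in values:
        if v == center:
            continue
        side = 1 if v > center else -1
        current = current + 1 if side == previous_side else 1
        longest = max(longest, current)
        previous_side = side
    return longest


def longest_trend(values):
    """Longest streak of points that keep rising or keep falling."""
    longest = current = 1 if values else 0
    previous_direction = 0
    for earlier, later in zip(values, values[1:]):
        if later == earlier:
            continue  # a repeated value neither extends nor breaks a trend
        direction = 1 if later > earlier else -1
        current = current + 1 if direction == previous_direction else 2
        longest = max(longest, current)
        previous_direction = direction
    return longest


# Made-up daily measurements of the Goal Statement metric.
stable = [12, 9, 11, 8, 12, 9, 11, 8, 12, 9]
shifted = [8, 9, 8, 9, 8, 9, 12, 13, 12, 13, 12, 13]

for name, data in (("stable", stable), ("shifted", shifted)):
    print(name, longest_run_about_median(data), longest_trend(data))
```

A long run on one side of the median hints at a shift; a long run of rising or falling points hints at a trend. Either one is a clue to investigate, not a conclusion.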

If we collected stratification factors, we can sort the data by each factor to find performance differences. These can be seen by plotting a Box Plot for each value of a factor or by running an Analysis of Variance (ANOVA).
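For readers who want to see what the ANOVA is doing, here is a minimal sketch of the one-way F statistic computed by hand for a single stratification factor. The function name and the shift data are hypothetical. The F value compares the variation between groups with the variation within groups, so a large value suggests that the factor makes a difference.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within).

    `groups` maps each value of a stratification factor to its measurements.
    """
    all_values = [v for g in groups.values() for v in g]
    grand_mean = mean(all_values)
    k = len(groups)        # number of groups
    n = len(all_values)    # total number of measurements
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2
                     for g in groups.values())
    ss_within = sum((v - mean(g)) ** 2
                    for g in groups.values() for v in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Made-up cycle times (days), stratified by shift.
by_shift = {"day": [8, 9, 10], "night": [12, 13, 14]}
f, df_b, df_w = one_way_anova_f(by_shift)
print(f"F = {f:.1f} with ({df_b}, {df_w}) degrees of freedom")
```

To judge significance, compare F with a critical value from an F table for those degrees of freedom. If SciPy is available, `scipy.stats.f_oneway` computes the same statistic along with a p-value.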

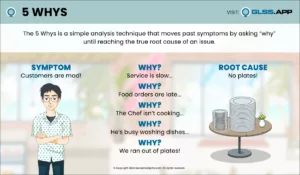

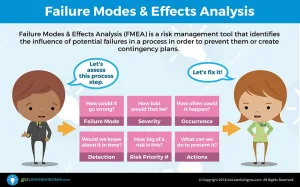

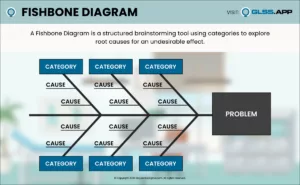

Root Cause Identification

Analysis of the Baseline Performance will often give us clues to potential root causes. We dig deeper to find what’s behind the data clues. Those clues are not root causes but may signal that they’re nearby. For instance, if we find a difference between the day shift and the night shift, we cannot conclude that the shift is a root cause! Our process is not smart enough to misbehave on certain shifts. Rather, something different is happening on different shifts, perhaps a somewhat different process. Those differences are either a root cause or close to one.

Root Cause Confirmation

We work to confirm suspected root causes, either through process observation or data analysis. If the problem we are trying to solve has been around a while, chances are that others have already tried to solve it, some with great confidence. Long-standing problems, sometimes called hardy perennials, persist because people assumed they knew the cause but missed the true root cause. We have to confirm the suspected root causes in order to develop solutions with confidence.

To confirm a root cause, we intervene in the process and either temporarily remove the root cause or do something to neutralize its effect. We then run the process for a short time and measure the performance. If it is clearly better, we have confirmed the root cause. If not, we keep looking further.
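“Clearly better” is a judgment call, and a short trial gives us only a handful of points. One way to put a number on it, offered here as my own assumption rather than a required DMAIC step, is a sign test: count how many trial points beat the baseline median and ask how likely that count would be by chance alone. The function name and data below are hypothetical.

```python
from math import comb
from statistics import median


def sign_test_vs_baseline(baseline, trial, lower_is_better=True):
    """One-sided sign test of trial points against the baseline median.

    Returns (better, n, p_value), where p_value is the chance of seeing at
    least `better` favorable points out of n if each were a coin flip.
    """
    center = median(baseline)
    informative = [v for v in trial if v != center]  # ties carry no information
    if lower_is_better:
        better = sum(v < center for v in informative)
    else:
        better = sum(v > center for v in informative)
    n = len(informative)
    p_value = sum(comb(n, k) for k in range(better, n + 1)) / 2 ** n
    return better, n, p_value


# Made-up data: baseline cycle times, then a short trial with the
# suspected root cause temporarily removed.
baseline = [12, 14, 11, 13, 12, 15, 13, 12, 14, 13]
trial = [9, 10, 8, 11, 9, 10]

better, n, p = sign_test_vs_baseline(baseline, trial)
print(f"{better} of {n} trial points beat the baseline median (p = {p:.4f})")
```

A small p-value supports the suspected root cause. A large one means the trial did not separate itself from the baseline, and we keep looking.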

Solutions

Once we confirm a root cause, we should consider how to act on what we have learned. Our solutions should always include some action to neutralize a root cause. While we can add anything that makes sense, at least one of the solutions has to be based on a confirmed root cause.

Verification

If our solutions are effective, the process performance should prove it. We continue to monitor process performance, extending the Run Chart from the baseline performance past our solution implementation. Once again, we are measuring the metric targeted in the Goal Statement, and we fully expect to see a favorable shift in performance.

Monitoring and Response Plan

Once we confirm improvement, we need to be sure it lasts, so we continue to measure the performance. We set trigger points (levels to “go no higher than” or “go no lower than”) and take corrective action when the process fails to perform as expected. We should also track leading indicators, meaning input measures or upstream process measures that signal a problem before it emerges. Acting on leading indicators helps us respond in time to prevent poor process performance.
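A Monitoring and Response Plan can be as simple as a table of measures, trigger points, and agreed responses. The sketch below shows one way to check the latest readings against such a table. Every measure name, limit, and response in it is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trigger:
    measure: str
    upper: Optional[float] = None   # "go no higher than"
    lower: Optional[float] = None   # "go no lower than"
    response: str = ""

    def breached(self, value: float) -> bool:
        too_high = self.upper is not None and value > self.upper
        too_low = self.lower is not None and value < self.lower
        return too_high or too_low


def actions_needed(triggers, readings):
    """Responses for every trigger whose latest reading is out of bounds."""
    return [t.response for t in triggers
            if t.measure in readings and t.breached(readings[t.measure])]


# Made-up plan: one outcome measure plus two leading indicators.
plan = [
    Trigger("cycle_time_days", upper=7,
            response="Review open invoices with the team lead"),
    Trigger("incoming_volume", upper=500,
            response="Add a temporary reviewer"),
    Trigger("first_pass_yield", lower=0.95,
            response="Audit the input forms"),
]

readings = {"cycle_time_days": 6.0, "incoming_volume": 540,
            "first_pass_yield": 0.97}
print(actions_needed(plan, readings))
```

In this example the outcome measure is still within its limit, but a leading indicator has crossed its trigger point, so the plan calls for action before performance suffers.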

Summary

By keeping the connections between the dots in mind, we can ensure we are using the DMAIC tools to solve a problem efficiently. Those connections also help us tell our story convincingly with our Storyboard. I look for them when reviewing Storyboards, and often find a few missing “dots.”